bagging machine learning ppt

Given are a training set of N examples, each an (attributes, class label) pair, and a base learning model. The bagging technique is useful for both regression and statistical classification.

The process of bagging is very simple yet often quite powerful.

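Bagging is only effective when using unstable base learners, i.e. learners whose fitted model changes substantially under small changes to the training data. Related slide notes mention a value of 4 chosen empirically, components combined using voting, some results with BP and C4.5 components, and some theories on bagging/boosting error. Bagging is similar to divide and conquer.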

Common ensemble methods include bagging (bootstrap aggregation), AdaBoost and random forests. The Bayes optimal classifier is itself an ensemble learner. Arcing (adaptive reweighting and combining) is a generic term that refers to reusing or selecting data in order to improve classification.

Bagging, also known as bootstrap aggregating, is the aggregation of multiple versions of a predictive model. Each model is trained individually, and their predictions are combined using an averaging process. Once the results are predicted, you then use the …

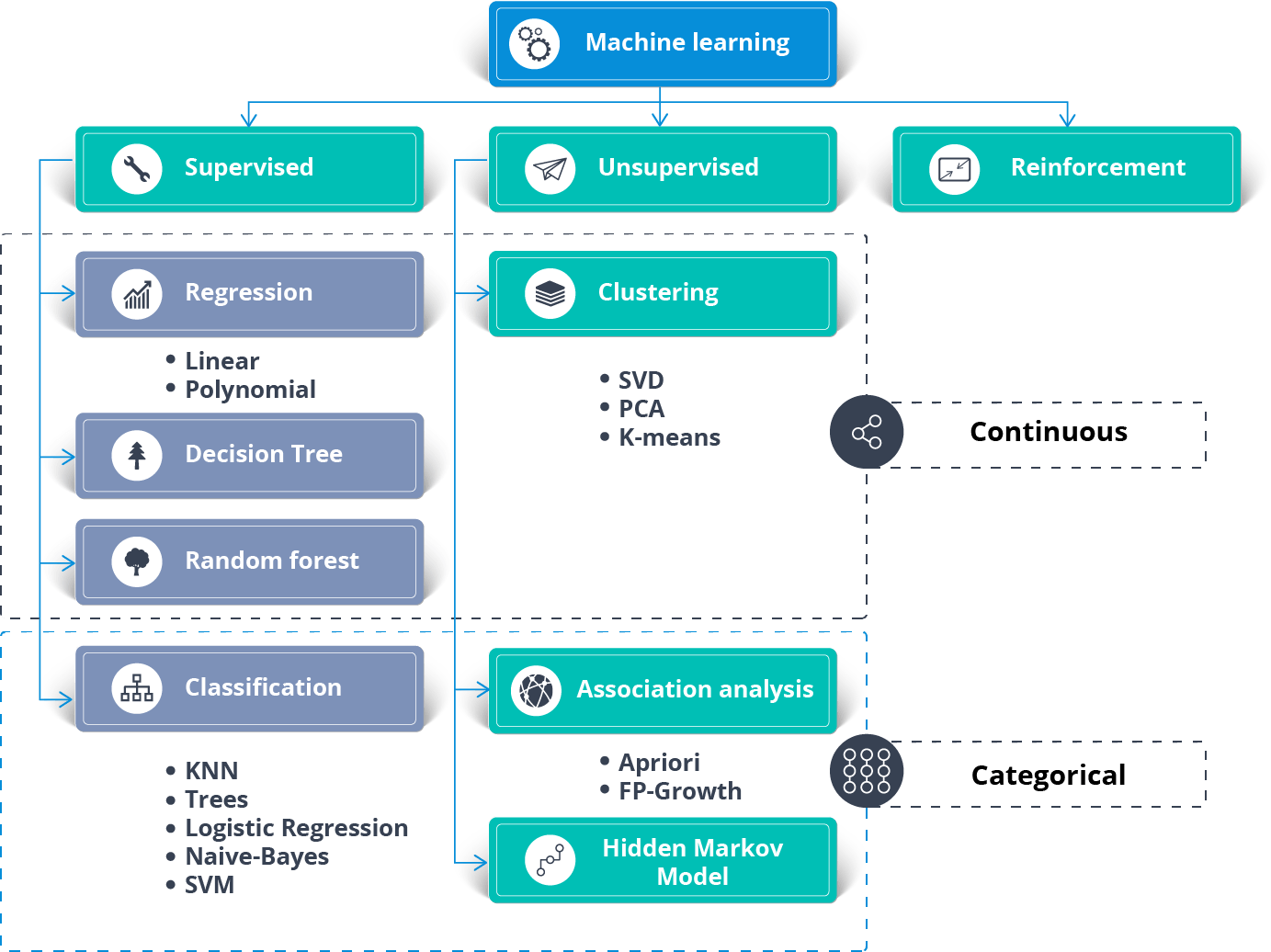

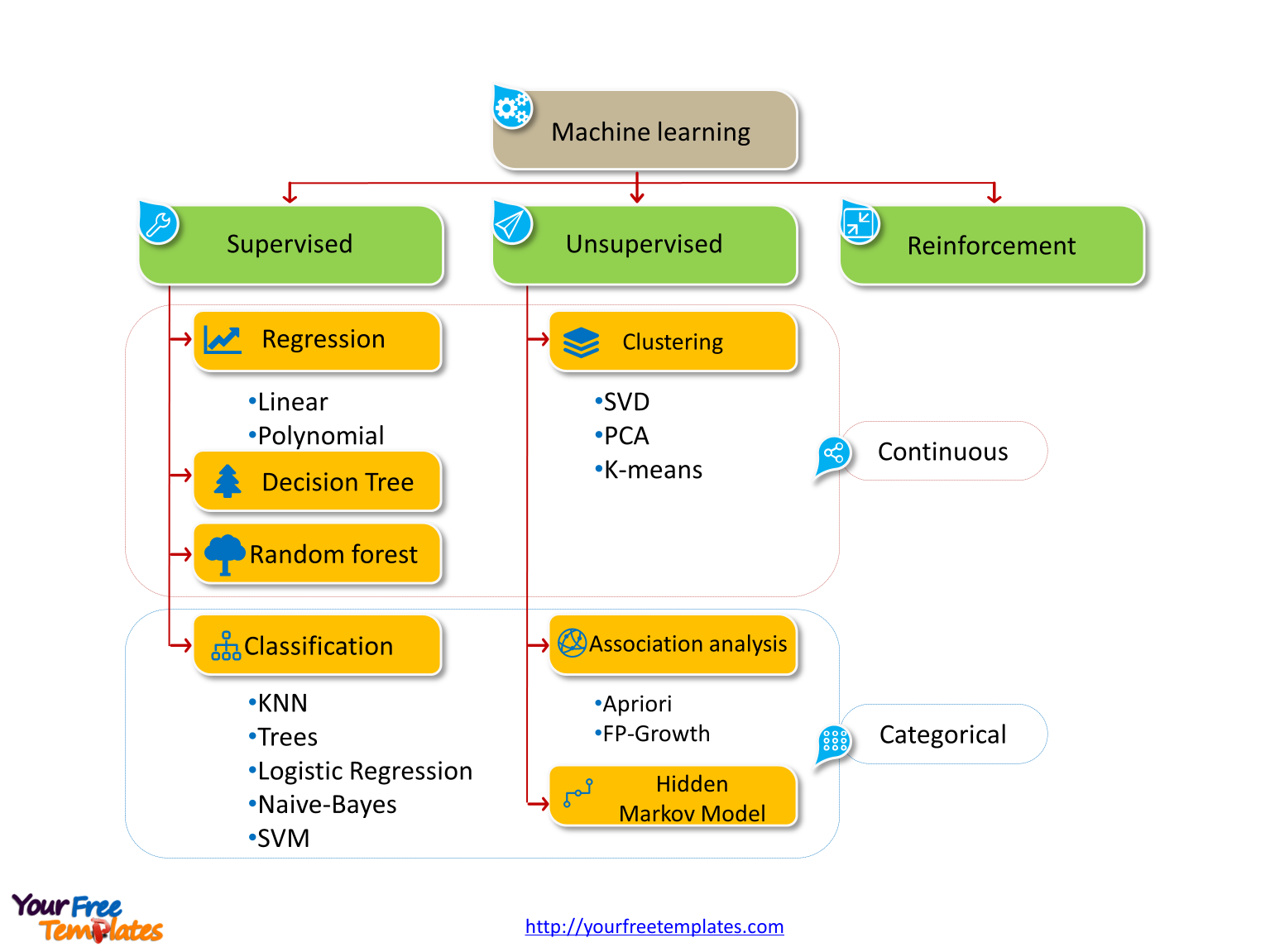

Bagging uses subsets (bags) of the original dataset to get a fair idea of the overall distribution. (Machine Learning CS771A: Ensemble Methods.) In the first section of this post we will present the notions of weak and strong learners, and we will introduce three main ensemble learning methods.

Generally, these methods are used in regression as well as classification problems. Bagging overcomes classifier instability. A classifier is unstable if small changes in the training data lead to significantly different classifiers or large changes in accuracy. Decision tree algorithms can be unstable: a slight change in the position of a training point can lead to a radically different tree. Bagging improves recognition for unstable classifiers.

Boosting and arcing: arcing samples the data set like bagging, but the probability of a data point being chosen is weighted like boosting. Let m_i be the number of mistakes made on point i by the previous classifiers; the probability of selecting point i increases with m_i. Bagging techniques are also called bootstrap aggregation. The seminal paper is Breiman, Leo (1996).
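The source does not give the selection formula itself. One well-known rule of this kind is Breiman's arc-x4, where p_i = (1 + m_i^4) / Σ_j (1 + m_j^4) and the exponent 4 was chosen empirically. The sketch below assumes that rule; the function name and the mistake counts are hypothetical.

```python
def arc_x4_probabilities(mistakes):
    """Selection probabilities p_i = (1 + m_i**4) / sum_j (1 + m_j**4).

    Assumption: the arc-x4 weighting; the source text omits its formula.
    """
    weights = [1 + m ** 4 for m in mistakes]
    total = sum(weights)
    return [w / total for w in weights]


# Hypothetical mistake counts for five training points.
mistakes = [0, 0, 1, 2, 0]
probs = arc_x4_probabilities(mistakes)

# Points that were misclassified more often are resampled more often.
for m, p in zip(mistakes, probs):
    print(f"mistakes={m}  p={p:.3f}")
```

With these counts, the point misclassified twice receives most of the probability mass, so the next classifier sees it far more often than the points that were always classified correctly.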

Bootstrap aggregating: each model in the ensemble votes with equal weight, and each model is trained on a random training set. Random forests tend to do better than bagged entropy-reducing decision trees (DTs). Bootstrap estimation: repeatedly draw n samples from D and, for each set of samples, estimate a statistic. Bootstrapping is commonly used in statistical problems to approximate the variance of an estimator.
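As a rough illustration of bootstrap estimation, the sketch below approximates the variance of the sample mean by resampling with replacement. The data are synthetic, and the function name, the number of resamples and the seeds are assumptions made for the example.

```python
import random
import statistics


def bootstrap_variance(data, statistic, n_resamples=1000, seed=0):
    """Estimate the variance of `statistic` by resampling `data` with replacement."""
    rng = random.Random(seed)
    n = len(data)
    estimates = []
    for _ in range(n_resamples):
        resample = rng.choices(data, k=n)  # n draws from D, with replacement
        estimates.append(statistic(resample))
    return statistics.variance(estimates)


# Synthetic data set D (hypothetical): 200 draws from a normal distribution.
data_rng = random.Random(42)
data = [data_rng.gauss(10.0, 2.0) for _ in range(200)]

var_of_mean = bootstrap_variance(data, statistics.mean)
analytic = statistics.variance(data) / len(data)  # textbook s^2 / n for comparison
print(var_of_mean, analytic)
```

For the mean, the bootstrap estimate should land close to the textbook value s²/n; the same function can be pointed at statistics such as the median, where no simple formula is available.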

Bagging is often used with decision trees, where it significantly raises the stability of models by improving accuracy and reducing variance, which reduces overfitting. On average, each bootstrap sample contains about 63% of the distinct training instances. This encourages predictors to have uncorrelated errors, which is why bagging works. Usually set … or use validation data to pick …. The models need to be unstable.
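The 63% figure follows from sampling N times with replacement: a given instance is missed by every draw with probability (1 - 1/N)^N, which tends to 1/e, so the expected fraction of distinct instances is about 1 - 1/e ≈ 0.632. A small check, with hypothetical function names and an arbitrary N:

```python
import math
import random


def expected_unique_fraction(n):
    """Expected fraction of distinct instances in a bootstrap sample of size n."""
    return 1.0 - (1.0 - 1.0 / n) ** n


def simulated_unique_fraction(n, trials=200, seed=0):
    """Average fraction of distinct indices over repeated bootstrap draws."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(trials):
        drawn = {rng.randrange(n) for _ in range(n)}  # a set keeps distinct indices only
        total += len(drawn) / n
    return total / trials


n = 1000
exact = expected_unique_fraction(n)
simulated = simulated_unique_fraction(n)
limit = 1.0 - math.exp(-1.0)
print(exact, simulated, limit)
```

The instances left out of a given bootstrap sample (roughly 37%) are often called out-of-bag instances.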

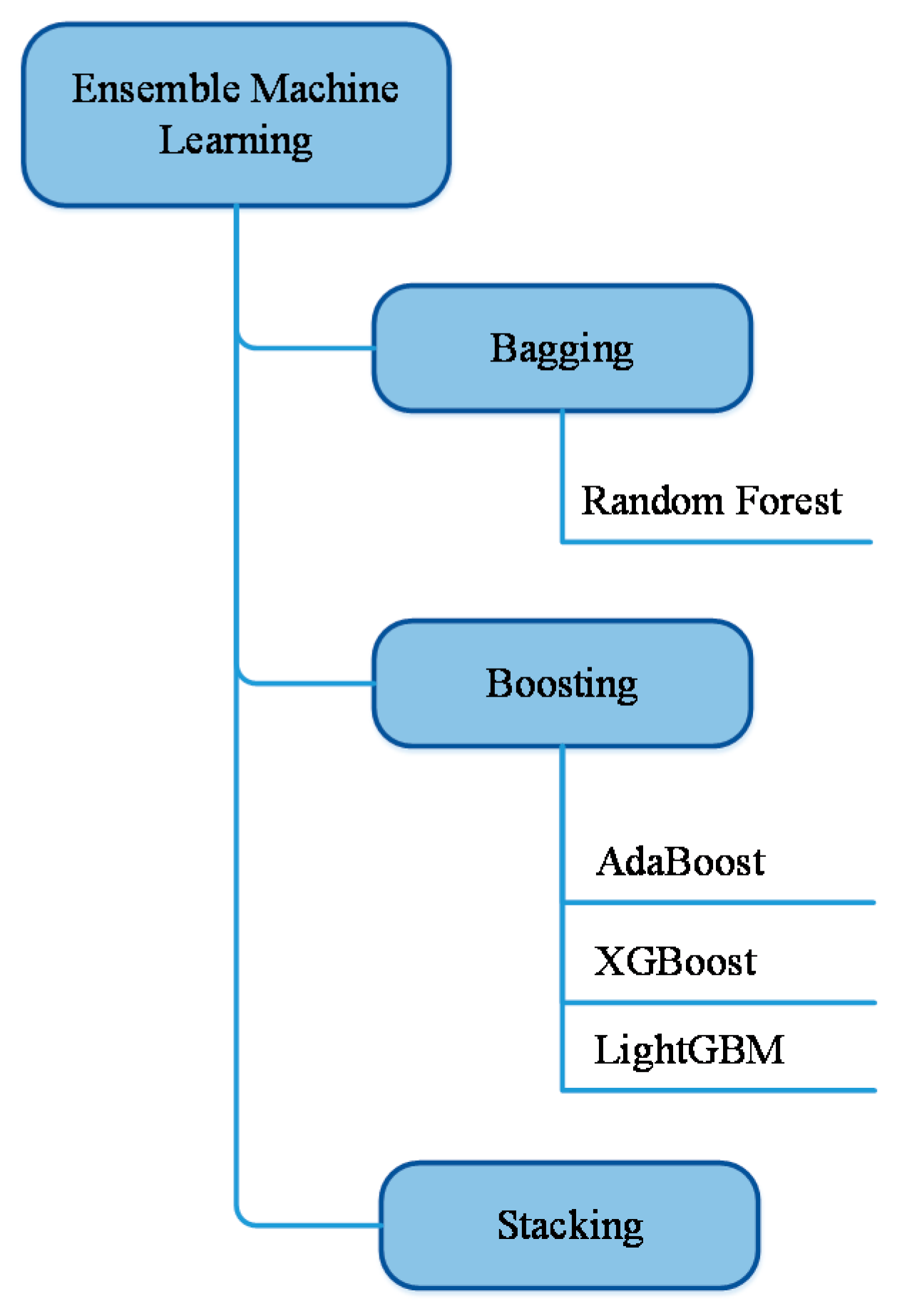

The three main ensemble learning methods are bagging, boosting and stacking. An ensemble is a group of predictive models run on multiple subsets of the original dataset and combined to achieve better accuracy and model stability.

A base learner, e.g. a decision tree or a neural network, is fitted during the training stage. Bagging stands for bootstrap aggregation. It takes the original data set D with N training examples and creates M copies {D_m}, m = 1, ..., M, where each D_m is generated from D by sampling with replacement.

Let's assume we have a sample dataset of 1000 instances (x) and we are using the CART algorithm.

Furthermore, more and more techniques apply machine learning as a solution. For unstable models (e.g. nonlinear ones), a small change in the training set can cause a significant change in the model. A bagging classifier is an ensemble meta-estimator that fits base classifiers, each on a random subset of the original dataset, and then aggregates their individual predictions, either by voting or by averaging, to form a final prediction.
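To make the meta-estimator idea concrete, here is a minimal from-scratch sketch rather than any library's implementation. It assumes one-dimensional inputs, binary labels and a decision stump as the base classifier; all names and the toy data are hypothetical.

```python
import random
from collections import Counter


def fit_stump(points, labels):
    """Fit a one-feature threshold classifier (decision stump) by exhaustive search."""
    best = None
    for threshold in sorted(set(points)):
        for left, right in ((0, 1), (1, 0)):
            errors = sum(
                (left if x <= threshold else right) != y
                for x, y in zip(points, labels)
            )
            if best is None or errors < best[0]:
                best = (errors, threshold, left, right)
    _, threshold, left, right = best
    return lambda x: left if x <= threshold else right


def fit_bagging(points, labels, n_estimators=25, seed=0):
    """Train each base classifier on its own bootstrap sample of the data."""
    rng = random.Random(seed)
    n = len(points)
    models = []
    for _ in range(n_estimators):
        idx = [rng.randrange(n) for _ in range(n)]  # sample indices with replacement
        models.append(fit_stump([points[i] for i in idx], [labels[i] for i in idx]))
    return models


def predict_bagging(models, x):
    """Aggregate the base classifiers' predictions by voting."""
    votes = Counter(model(x) for model in models)
    return votes.most_common(1)[0][0]


# Hypothetical toy data: label is 1 when x > 5, with about 10% of labels flipped.
data_rng = random.Random(1)
xs = [data_rng.uniform(0, 10) for _ in range(200)]
ys = [int(x > 5) if data_rng.random() > 0.1 else int(x <= 5) for x in xs]

ensemble = fit_bagging(xs, ys)
print(predict_bagging(ensemble, 1.0), predict_bagging(ensemble, 9.0))
```

In practice a library implementation such as scikit-learn's `BaggingClassifier` would normally be used; the sketch only shows the two steps named above, fitting on random subsets and aggregating by vote.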

(CS 2750 Machine Learning: AdaBoost.) And then you place the samples back into your bag. To understand bagging, let's first understand the term bootstrapping.

Ensemble machine learning can be mainly categorized into bagging and boosting. In the future, machine learning will play an important role in our daily life. In AdaBoost, a sequence of T base models is trained on T different sampling distributions defined upon the training set D. A sample distribution D_t for building model t is …

Then, in the second section, we will focus on bagging and discuss notions such as bootstrapping, bagging and random forests. Bagging, short for bootstrap aggregating, combines the results of several learners trained on bootstrapped samples of the training data.

You take 5000 people out of the bag each time and feed the input to your machine learning model. Two algorithms in this family are the bagging meta-estimator and random forest. Each bootstrap sample is used to train a different component base classifier. Classification is done by plurality voting; regression is done by averaging. Bagging works for unstable classifiers such as neural networks and decision trees (Kuncheva example).
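The two aggregation rules can be sketched in a few lines. The component outputs below are made up, and the helper names are hypothetical; note that a plurality winner needs the most votes, not more than half of them.

```python
from collections import Counter
from statistics import mean


def plurality_vote(predictions):
    """Return the label predicted by the largest number of component classifiers."""
    return Counter(predictions).most_common(1)[0][0]


def average(predictions):
    """Return the mean of the component regressors' outputs."""
    return mean(predictions)


# Hypothetical outputs of seven component classifiers and three regressors.
class_votes = ["cat", "dog", "cat", "bird", "dog", "cat", "bird"]
reg_outputs = [2.0, 2.5, 3.0]

winner = plurality_vote(class_votes)  # "cat" wins with 3 of 7 votes, under half
estimate = average(reg_outputs)
print(winner, estimate)
```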

Bootstrap aggregation: create bootstrap samples of a training set using sampling with replacement. The primary focus of bagging is to achieve less variance than any model has individually.

Bagging is the application of the bootstrap procedure to a high-variance machine learning algorithm, typically decision trees. There are mainly two types of bagging techniques.


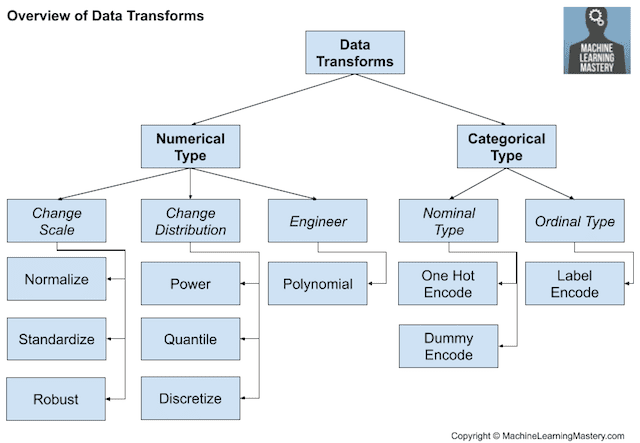
